Principles

- Understand integration with third-party partner tools and systems using NSX REST APIs

- Determine integration with third-party services

  - Network services

  - Security services

  - Load Balancing

  - Anti-malware

  - IDS/IPS

- Determine integration with third-party hardware

  - Network Interface Cards (NICs)

  - Terminating overlay networks

  - HW VTEP

  - VXLAN offload

  - RSS

- Install/register a third-party service with NSX

References

- NSX Administration Guide

- vmw-nsx-palo-alto-networks-solution-brief.pdf

- vmware-nsx-on-cisco-n7kucs-design-guide.pdf

- vmware-vsphere-pnics-performance-white-paper.pdf

- WP-Accelerating_Network_Virtualization_Overlays_for_VXLAN.pdf

- overlay-networks-using-converged-network-adapters-brief.pdf

- Understanding Hardware VTEP: https://www.relaxdiego.com/2014/09/hardware_vtep.html

Understand integration with third-party partner tools and systems using NSX REST APIs

Service Integration

NSX integrates with third-party network and security solutions, for example Palo Alto Networks. Third-party endpoint and network introspection services are available to monitor and control VMs and traffic flows.

- Partner Services register with NSX Manager through the REST API

- Services may be registered either from the partner solution itself or through the NSX Manager UI

- Once a service is registered, NSX Manager deploys the partner security VM to each ESXi host in the selected cluster

- Security Policies configured through Service Composer are distributed to the security VMs

- Network traffic is steered towards Security VMs for further inspection and policing as dictated by the security policies in force for a given VM
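The registration step in the list above is an ordinary authenticated REST call. The sketch below builds such a request without sending it. NSX Manager's API is reached over HTTPS with basic authentication and XML bodies, but the path `/api/2.0/si/service`, the hostname, the credentials and the XML payload shown here are illustrative assumptions; check the NSX API guide for the exact resource and schema.

```python
import base64
import urllib.request

# ASSUMPTION: illustrative path only; confirm against the NSX API guide.
ASSUMED_SERVICE_PATH = "/api/2.0/si/service"


def build_registration_request(manager, user, password, service_xml,
                               path=ASSUMED_SERVICE_PATH):
    """Build (but do not send) a POST that would register a partner service."""
    credentials = f"{user}:{password}".encode("utf-8")
    token = base64.b64encode(credentials).decode("ascii")
    return urllib.request.Request(
        url=f"https://{manager}{path}",
        data=service_xml.encode("utf-8"),
        method="POST",
        headers={
            "Authorization": f"Basic {token}",
            "Content-Type": "application/xml",
        },
    )


# Hypothetical payload; a real service definition carries far more detail.
example_xml = "<service><name>partner-ips</name></service>"
request = build_registration_request(
    "nsxmgr.example.local", "admin", "secret", example_xml
)
print(request.get_method(), request.full_url)
```

Building the request separately from sending it makes it easy to inspect the URL, headers and body before anything touches a live NSX Manager.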

Distributed Service Insertion

With Distributed Service Insertion, all the required security modules and VMs reside on a single ESXi host. When the service is deployed through NSX Manager, the same configuration is replicated to all ESXi hosts within the selected cluster. Because the service modules are placed on every host, traffic that is steered or redirected from a VM towards a service VM does not leave the local host, which improves performance. Should a VM be migrated with vMotion, the local security VM transfers the VM's security state to the destination host's security VM, thereby maintaining a consistent security posture. Solutions using this approach:

- Intrusion Detection Services (IDS)

- Intrusion Prevention Services (IPS)

- Anti-Virus

- File Integrity Monitoring (FIM)

- Vulnerability Management
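The two properties described for Distributed Service Insertion, local inspection and state hand-off on vMotion, can be illustrated with a toy model. Everything here (the `Host` class, the `vmotion` function, the VM and host names) is hypothetical and only mirrors the behaviour described above; it is not how NSX implements it.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    name: str
    vms: set = field(default_factory=set)
    # Per-VM state held by this host's local security VM.
    svm_state: dict = field(default_factory=dict)

    def inspect(self, vm, flow):
        """Redirect a flow to the local security VM; it never leaves the host."""
        if vm not in self.vms:
            raise ValueError(f"{vm} is not running on {self.name}")
        self.svm_state.setdefault(vm, []).append(flow)
        return self.name  # host where the inspection took place


def vmotion(vm, source, destination):
    """Move a VM and hand its security state to the destination's security VM."""
    source.vms.remove(vm)
    destination.vms.add(vm)
    destination.svm_state[vm] = source.svm_state.pop(vm, [])


esx1, esx2 = Host("esx1", {"web01"}), Host("esx2")
esx1.inspect("web01", "tcp/443")
vmotion("web01", esx1, esx2)
print(esx2.svm_state)  # {'web01': ['tcp/443']}
```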

Edge-Based Service Insertion

The NSX Edge Services Gateway (ESG) also offers Service Insertion for Application Delivery Controllers and Load Balancers. Traffic can be directed to third-party vendor services in a similar manner to the IDS/IPS services described above, except that in this case the service is consumed through the ESG Load Balancer configuration rather than through Service Composer.

Determine integration with third-party services

A sample set of services is described below. For a full list see the VMware website: https://www.vmware.com/products/nsx/technology-partners.html

Network Services

Hardware Gateways are used to bridge NSX VXLANs to VLANs on physical networks. Various vendors provide VXLAN Gateways, including:

- Brocade: VCS Gateway on VDX 6740/6740T switches

- Arista: Native VXLAN support in Arista EOS switches combined with CloudVision Management Platform for NSX Integration

- Dell: S6000 Network Switch providing VXLAN Gateway functionality and direct integration with NSX

Security services, IDS/IPS, Anti-Malware

Palo Alto

- Integration with Palo Alto VM-Series

- Traffic is steered towards PA VM-Series service VMs for deep packet inspection and security policy enforcement

Palo Alto Panorama registers the VM-Series partner service with NSX Manager, after which NSX Manager automatically deploys VM-Series VMs to the appropriate ESXi hosts.

- Security Groups configured in PA Panorama are automatically reflected in NSX.

- Security Group membership is configured directly in NSX Manager

- Security Policies configured in Panorama are also reflected in NSX

- Panorama automatically creates the necessary traffic redirection policies in NSX to steer traffic towards Palo Alto VM-Series

Fortinet

Fortinet NSX Service Integration works in a similar manner to Palo Alto. Fortigate-VMX security nodes are automatically deployed on ESXi hosts through the usual service registration procedure.

- Fortigate-VMX Service Manager registers the Fortinet Security Service with NSX Manager

- NSX Manager deploys Fortinet Security Nodes to the appropriate ESXi Hosts

- Fortigate-VMX Service Manager supplies service definitions to NSX Manager

- NSX Manager is responsible for Security Group membership and updates Fortigate in real time

- Security Policies configured in Fortigate Service Manager are enforced through traffic steering from NSX towards Fortigate

Checkpoint

The Checkpoint integration is composed of two components:

- vSEC Controller

- vSEC Gateway

The vSEC Controller integrates with NSX as a Security Service.

The vSEC Gateway is a Service VM (SVM) deployed on each ESXi Host through NSX Manager.

Management policies are configured with the Checkpoint Smart Management Server, which can manage both Physical and Virtual Checkpoint appliances.

The vSEC Controller learns about the virtual environment from NSX Manager. The Security Group and membership information it obtains from NSX Manager is then used to define security policies in the SmartConsole management client, and those policies are installed on the vSEC Gateways. As with the other solutions, changes to Security Group membership are propagated automatically from NSX to the Checkpoint vSEC Controller, so security policies are updated automatically.
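A pattern common to the Palo Alto, Fortinet and Checkpoint integrations is that NSX owns Security Group membership and the partner controller is kept in step with it. The sketch below illustrates that relationship with a simple observer pattern. The class names, method names and VM names are hypothetical and do not correspond to any vendor API.

```python
class SecurityGroup:
    """Toy stand-in for an NSX Security Group that notifies subscribers."""

    def __init__(self, name):
        self.name = name
        self.members = set()
        self._subscribers = []

    def subscribe(self, callback):
        self._subscribers.append(callback)

    def add_member(self, vm):
        self.members.add(vm)
        self._notify()

    def remove_member(self, vm):
        self.members.discard(vm)
        self._notify()

    def _notify(self):
        # Push an immutable snapshot of the membership to every subscriber.
        for callback in self._subscribers:
            callback(self.name, frozenset(self.members))


class PartnerController:
    """Toy stand-in for a partner controller tracking group membership."""

    def __init__(self):
        self.known_groups = {}

    def on_membership_change(self, group_name, members):
        self.known_groups[group_name] = members


web_tier = SecurityGroup("web-tier")
controller = PartnerController()
web_tier.subscribe(controller.on_membership_change)
web_tier.add_member("web01")
web_tier.add_member("web02")
web_tier.remove_member("web01")
print(sorted(controller.known_groups["web-tier"]))  # ['web02']
```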

Load Balancing

F5 Networks

The NSX to F5 integration works in a similar manner to the security services integration, in that an external service is first registered with NSX and then consumed by a component within the NSX environment. With security services integration, the external services are consumed through the Service Composer security policy framework. With the F5 BIG-IQ Cloud integration, the external service is consumed through an NSX Load Balancer configured on an NSX Edge Services Gateway.

Catalog services are first configured in the BIG-IQ portal to be consumed through NSX. When the Load Balancer is enabled on an NSX ESG, the “Service Insertion” checkbox is selected to present F5 services to that ESG.

Load Balancer Pools and VIPs configured through NSX are then provisioned through BIG-IQ. In other words, the service integration deploys BIG-IP VE appliances on demand to implement the desired load balancing configuration. Additionally, the service can present configuration options defined in F5 iApps Policies in the NSX GUI.

Determine integration with third-party hardware

Network Interface Cards (NICs)

There are no special NIC requirements for NSX, other than that the adapter must be able to carry VXLAN frames on an Ethernet segment with an MTU of 1572 bytes, although 1600 is recommended. However, a number of network vendors have implemented features in Network Interface Cards to improve VXLAN performance, including:
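The larger MTU is needed because VXLAN wraps each guest frame in additional headers. As a rough arithmetic sketch, using the header sizes of the VXLAN encapsulation defined in RFC 7348, the transport MTU required for a 1500-byte guest MTU can be worked out as below. The function name and parameters are illustrative. The exact minimum depends on the outer IP version and on whether the inner frame is VLAN tagged, which is why quoted minimums vary and why 1600 is recommended as comfortable headroom.

```python
# Header sizes in bytes for VXLAN encapsulation (RFC 7348).
INNER_ETHERNET = 14
INNER_VLAN_TAG = 4   # only if the guest frame carries an 802.1Q tag
VXLAN_HEADER = 8
UDP_HEADER = 8
OUTER_IPV4 = 20
OUTER_IPV6 = 40


def required_transport_mtu(guest_mtu=1500, outer_ipv6=False, inner_vlan=False):
    """IP MTU the transport network needs to carry a full-size guest packet."""
    outer_ip = OUTER_IPV6 if outer_ipv6 else OUTER_IPV4
    inner_l2 = INNER_ETHERNET + (INNER_VLAN_TAG if inner_vlan else 0)
    return guest_mtu + inner_l2 + VXLAN_HEADER + UDP_HEADER + outer_ip


print(required_transport_mtu())                                  # 1550
print(required_transport_mtu(inner_vlan=True))                   # 1554
print(required_transport_mtu(outer_ipv6=True, inner_vlan=True))  # 1574
```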

- VXLAN Offload

- Receive Side Scaling (RSS)

- Hardware VTEP (HW Gateways)

VXLAN Offload

Network Adapters often offer performance improvements through a process known as TCP Segmentation Offload (TSO).

TSO improves transmission performance by having the physical network adapter take over segmentation and checksum computation from the Hypervisor or other host-native application. The application hands the adapter a single large buffer, so the application (and hence the Host CPU) is relieved of the tedious task of splitting large data streams into MTU-sized packets and computing the associated checksums. Packet segmentation is effectively delegated to the network adapter, where it is performed in hardware.
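To give a feel for the work being offloaded, the sketch below does in software what a TSO-capable adapter does in hardware: it splits one large send buffer into segments no larger than the maximum segment size (MSS). The 1460-byte MSS assumes a 1500-byte MTU with 20-byte IPv4 and 20-byte TCP headers; the function name is illustrative, and header generation and checksums are omitted.

```python
def segment(payload: bytes, mss: int = 1460) -> list:
    """Split a large send buffer into chunks of at most `mss` bytes."""
    if mss <= 0:
        raise ValueError("mss must be positive")
    return [payload[i:i + mss] for i in range(0, len(payload), mss)]


send_buffer = bytes(65536)  # one 64 KiB write handed down by the application
segments = segment(send_buffer)
print(len(segments), len(segments[-1]))  # 45 1296
```

Without TSO the host CPU performs this loop, plus header construction and checksums, for every large write. With TSO it passes the 64 KiB buffer to the adapter once.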

VXLAN traffic cannot benefit from this feature in its native form because VXLAN datagrams are UDP encapsulated, whereas TSO works on TCP packets. Several manufacturers now also support a variant of TSO called VXLAN Offload. As well as supporting UDP, VXLAN Offload inspects the inner packet header to determine the L2 destination MAC address. Recall that the outer header of a VXLAN frame carries the MAC address of the VTEP, so traffic destined for multiple VMs behind the same VTEP shares the same outer destination MAC address. Because offloading allocates traffic into threads based on destination MAC address, the traditional TSO technique would not fully utilise the available bandwidth with VXLAN traffic. By inspecting the inner destination MAC, VXLAN Offload is able to distribute traffic more evenly amongst the available threads, which both improves throughput and reduces Host CPU utilisation.
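The effect of looking at the inner rather than the outer destination MAC can be shown with a small sketch. The hash used here (the MAC as an integer, modulo the queue count) is a deliberately simple stand-in for whatever the adapter really does, and all MAC addresses are made up for illustration.

```python
def queue_for(mac: str, n_queues: int) -> int:
    """Stand-in for the NIC's hash: the MAC as an integer, modulo the queue count."""
    return int(mac.replace(":", ""), 16) % n_queues


VTEP_MAC = "00:50:56:aa:bb:cc"  # hypothetical outer destination (the VTEP)

# (outer destination MAC, inner destination MAC) for four VMs behind one VTEP
frames = [
    (VTEP_MAC, "00:50:56:00:00:01"),
    (VTEP_MAC, "00:50:56:00:00:02"),
    (VTEP_MAC, "00:50:56:00:00:03"),
    (VTEP_MAC, "00:50:56:00:00:04"),
]

by_outer = {queue_for(outer, 4) for outer, _ in frames}
by_inner = {queue_for(inner, 4) for _, inner in frames}
print(sorted(by_outer))  # [0]
print(sorted(by_inner))  # [0, 1, 2, 3]
```

Keyed on the outer MAC, every frame lands on the same queue. Keyed on the inner MAC, the same frames spread across all four.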

Receive Side Scaling (RSS)

Packet queueing is performed on incoming traffic streams to improve performance. Typically, MAC address filters are used to queue inbound packets. This can limit performance because all traffic destined for a particular VM is placed on a single queue. In a VXLAN environment the problem is exacerbated by the fact that multiple VMs may reside behind a single VTEP, and hence behind a single MAC address.

RSS improves inbound packet handling by distributing traffic based on flows rather than simply MAC addresses. As with VXLAN Offload, newer implementations of RSS are able to take both the outer and inner MAC Addresses into account when computing traffic flows, thereby exposing VM->VM traffic at a more granular level. This allows the traffic to be more evenly distributed to inbound queues and Host CPUs. In so doing not only is traffic flow improved, Host CPU utilisation is also reduced.
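The difference between MAC-filter queueing and flow-based distribution can be sketched as follows. CRC32 is used purely as a convenient deterministic stand-in for the adapter's real hash function, and the addresses, ports and function names are illustrative assumptions.

```python
import zlib


def mac_filter_queue(dst_mac: str, n_queues: int) -> int:
    """Queue chosen from the destination MAC alone."""
    return zlib.crc32(dst_mac.encode("ascii")) % n_queues


def rss_queue(flow: tuple, n_queues: int) -> int:
    """Queue chosen per flow: (src_ip, dst_ip, src_port, dst_port).

    CRC32 stands in for the NIC's hash; the same flow always maps to the
    same queue, which preserves packet ordering within a flow.
    """
    key = "|".join(str(part) for part in flow).encode("ascii")
    return zlib.crc32(key) % n_queues


VTEP_MAC = "00:50:56:aa:bb:cc"  # hypothetical: every flow arrives at this VTEP
flows = [
    ("10.0.0.1", "10.0.1.1", 40001, 443),
    ("10.0.0.2", "10.0.1.1", 40002, 443),
    ("10.0.0.3", "10.0.1.2", 40003, 8080),
]

mac_queues = {mac_filter_queue(VTEP_MAC, 8) for _ in flows}
flow_queues = [rss_queue(flow, 8) for flow in flows]
print(len(mac_queues), flow_queues)
```

With the MAC filter, every flow shares one queue regardless of how many there are. With flow hashing, each flow is pinned to a queue of its own choosing, so different flows can land on different queues and CPUs.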

Hardware VTEP

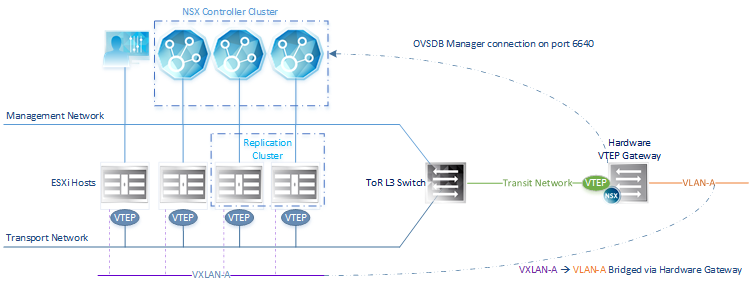

Hardware VTEPs are used for Layer 2 Bridging between VXLANs in a virtual environment and VLANs in a physical environment. The Open vSwitch Database (OVSDB) protocol is used for this purpose. The OVSDB database holds information on both the Physical and Virtual networks and is therefore central to the bridging process. The following components are required for Hardware VTEP configuration:

- Hardware VTEP Gateway compatible with NSX

The Hardware gateway presents a VTEP to the virtual network via the NSX Controllers

- Physical Host connected to a VLAN behind the Hardware Gateway

- Layer 3 Transit Network

- ESXi Hosts: at least 4

- 2 hosts allocated for HW VTEP Replication

- NSX Controller cluster

The Controllers use Port 6640 to communicate with the Hardware Gateway and exchange OVSDB transactions.
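OVSDB transactions are JSON-RPC messages, as defined in RFC 7047. As a minimal sketch of what travels over port 6640, the snippet below serialises a `list_dbs` request, which asks an OVSDB server which databases it hosts. It only builds the message and does not open a connection; the helper name is illustrative, and how the NSX Controllers actually sequence their transactions is not shown.

```python
import json

OVSDB_PORT = 6640  # port used between the Controllers and the Hardware Gateway


def ovsdb_request(method: str, params: list, request_id: int) -> bytes:
    """Serialise a JSON-RPC request in the form used by OVSDB (RFC 7047)."""
    message = {"method": method, "params": params, "id": request_id}
    return json.dumps(message).encode("utf-8")


message = ovsdb_request("list_dbs", [], 0)
print(message.decode("utf-8"))
```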

Note: The Hardware Gateway must reside on a separate network to the ESXi Hosts. In the diagram below, the Transport & Transit networks are separated by a ToR L3 Switch. Effectively this means the Hardware Gateways and ESXi Hosts use separate Transport Networks.

Additionally, a group of hosts in the NSX environment is allocated for replication. This is done through the “Service Definitions” screen in NSX Manager as shown below. A minimum of 2 hosts is recommended for replication; these hosts handle Broadcast, Unknown Unicast and Multicast (BUM) traffic from the Hardware Gateway towards the virtual environment.